Explainable AI (XAI): Making AI Decisions Transparent

Artificial Intelligence (AI) is transforming industries, from healthcare and finance to self-driving cars and customer service. But as AI systems grow more complex, a critical question arises: How do these models make decisions? If an AI denies a loan application, misdiagnoses a disease, or rejects a job candidate, can we trust its reasoning?

This is where Explainable AI (XAI) comes in. XAI aims to open up the "black box" of AI, making its decision-making process transparent, interpretable, and trustworthy. In this article, we’ll explore why XAI matters, how it works, real-world applications, and the challenges it faces.

Why Explainability Matters in AI

Imagine a doctor using an AI system to diagnose cancer. The AI recommends aggressive treatment, but when asked why, it simply responds: "Trust me." Would the doctor, or the patient, accept that blindly? Probably not.

The Black Box Problem

Many advanced AI models, particularly deep learning systems, operate as black boxes: they take inputs, process them through hidden layers, and produce outputs without revealing their reasoning. While they often perform with high accuracy, their lack of transparency raises concerns:

· Accountability: If an AI makes a harmful decision, who is responsible?

· Bias & Fairness: How do we know if the AI is discriminating against certain groups?

· Regulatory Compliance: Laws like the EU’s GDPR are widely read as giving users a "right to explanation" for automated decisions.

· User Trust: People are more likely to adopt AI if they understand how it works.

A 2022 survey by Deloitte found that 67% of business leaders consider explainability a top priority when deploying AI. Without it, AI adoption faces resistance, especially in high-stakes fields like medicine, law, and finance.

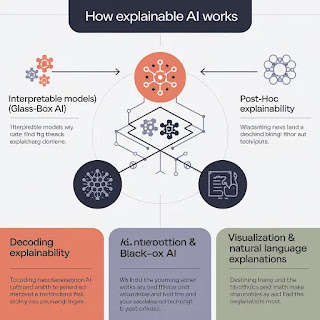

How Explainable AI Works

XAI isn’t a single technique but a collection of methods designed to make AI decisions interpretable. These approaches vary depending on the AI model:

1. Interpretable Models (Glass-Box AI)

Some AI models are inherently explainable because of their simple structure:

· Decision Trees: Follow a flowchart-like structure where each decision is traceable.

· Linear Regression: Assigns each input feature a coefficient that shows how strongly, and in which direction, it moves the output.

· Rule-Based Systems: Use predefined logical rules (e.g., "If credit score > 700, approve loan.").

While these models are transparent, they often lack the complexity needed for high-performance tasks.
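
To make the idea of a glass-box model concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the rule thresholds, the feature names (`credit_score`, `debt_to_income`), and the linear weights are hypothetical and do not come from any real lending policy. The point is only that every step of the decision can be read back.

```python
# Hypothetical rule-based loan screen: every threshold below is an
# illustrative assumption, not a real lending policy.
RULES = [
    ("credit_score > 700", lambda a: a["credit_score"] > 700),
    ("debt_to_income < 0.40", lambda a: a["debt_to_income"] < 0.40),
]


def decide(applicant):
    """Return (approved, trace) where trace records each rule's outcome."""
    trace = [(name, check(applicant)) for name, check in RULES]
    approved = all(passed for _, passed in trace)
    return approved, trace


# Hypothetical linear scoring model: score = intercept + sum(weight * value),
# so each feature's contribution can be read off directly.
WEIGHTS = {"credit_score": 0.01, "debt_to_income": -5.0}
INTERCEPT = -5.0


def linear_contributions(applicant):
    """Return the per-feature contributions and the resulting score."""
    contributions = {f: w * applicant[f] for f, w in WEIGHTS.items()}
    score = INTERCEPT + sum(contributions.values())
    return contributions, score


applicant = {"credit_score": 720, "debt_to_income": 0.45}
approved, trace = decide(applicant)
print("approved:", approved)
for name, passed in trace:
    print(f"  {name}: {'pass' if passed else 'fail'}")

contributions, score = linear_contributions(applicant)
print("contributions:", contributions, "score:", round(score, 2))
```

The rule trace shows exactly which condition failed, and the linear model's contributions show how much each feature pushed the score up or down. That traceability is what the simple structure buys.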

2. Post-Hoc Explainability (Decoding Black-Box AI)

For more complex models like neural networks, we use techniques to interpret decisions after the fact:

· Feature Importance: Identifies which inputs most influenced the decision (e.g., "Your loan was denied due to low income and high debt.").

· LIME (Local Interpretable Model-Agnostic Explanations): Fits a simple, interpretable surrogate model around a single prediction to approximate how the complex model behaves near that input.

· SHAP (SHapley Additive exPlanations): Borrows from game theory to quantify each feature’s contribution to the outcome.

For example, if an AI rejects a mortgage application, SHAP could reveal that credit history accounted for roughly 50% of the total attributed impact, while employment status accounted for about 30%.
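
The game-theory idea behind SHAP can be sketched with an exact Shapley value calculation on a tiny model. This is not the SHAP library's API. It is a brute-force illustration under stated assumptions: the scoring function `model`, the applicant, and the baseline "average applicant" are all made up. Each feature's value is its average marginal contribution over every order in which features could be switched from the baseline to the applicant's values.

```python
from itertools import permutations
from math import factorial

# Hypothetical applicant and baseline ("average applicant"); all numbers are
# made up for illustration.
APPLICANT = {"credit_history": 2.0, "employment": 0.0, "income": 40.0}
BASELINE = {"credit_history": 8.0, "employment": 1.0, "income": 55.0}


def model(x):
    """Toy approval score with one interaction term (an assumption)."""
    return (
        5.0 * x["credit_history"]
        + 20.0 * x["employment"]
        + 0.5 * x["income"]
        + 1.0 * x["credit_history"] * x["employment"]
    )


def value(coalition):
    """Model output when only features in `coalition` take the applicant's values."""
    x = {f: (APPLICANT[f] if f in coalition else BASELINE[f]) for f in APPLICANT}
    return model(x)


def shapley_values():
    """Exact Shapley values: average marginal contribution over all orderings."""
    features = list(APPLICANT)
    phi = {f: 0.0 for f in features}
    for order in permutations(features):
        seen = set()
        for f in order:
            before = value(seen)
            seen.add(f)
            phi[f] += value(seen) - before
    n_orders = factorial(len(features))
    return {f: total / n_orders for f, total in phi.items()}


phi = shapley_values()
base_score = value(set())
applicant_score = value(set(APPLICANT))
for f, contribution in phi.items():
    print(f"{f}: {contribution:+.2f}")
# Additivity: the contributions sum to the gap between the two scores.
print(round(sum(phi.values()), 6), round(applicant_score - base_score, 6))
```

The contributions are signed amounts that add up to the difference between the applicant's score and the baseline score. A percentage such as "50% impact" is a share derived from those amounts. Enumerating every ordering grows factorially with the number of features, so practical tools rely on approximations.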

3. Visualization & Natural Language Explanations

Humans understand visuals and narratives better than raw data. XAI tools often generate:

· Saliency Maps: Highlight which parts of an image a neural network focused on (used in medical imaging).

· Counterfactual Explanations: Show what small changes would reverse a decision (e.g., "Your loan would be approved if your income increased by $5,000.").

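A counterfactual explanation like the loan example above can be sketched as a search for the smallest change that flips the decision. The approval rule below is a hypothetical stand-in for a real model, and the function names, step size, and search limit are assumptions chosen for illustration.

```python
# Hypothetical approval rule; weights and threshold are illustrative assumptions.
def approve(income, debt):
    """Approve when a simple affordability score clears a fixed threshold."""
    return income - 2 * debt >= 40_000


def income_counterfactual(income, debt, step=500, max_increase=50_000):
    """Return the smallest income increase (in `step` units) that flips a denial.

    Returns 0 if already approved and None if no increase up to
    `max_increase` changes the decision.
    """
    if approve(income, debt):
        return 0
    for increase in range(step, max_increase + step, step):
        if approve(income + increase, debt):
            return increase
    return None


increase = income_counterfactual(income=55_000, debt=10_000)
if increase:
    print(f"Your loan would be approved if your income increased by ${increase:,}.")
```

The search treats the model purely as a function to query, so the same approach works for a black-box model. Real counterfactual methods usually search over several features at once and prefer changes the person could actually make.
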
Real-World Applications of XAI

1. Healthcare: Trusting AI Diagnoses

In 2020, an AI model at Mayo Clinic predicted patient heart risks with 90% accuracy—but doctors hesitated to use it without understanding why. By applying XAI, researchers found the model relied on subtle ECG patterns invisible to humans, increasing physician trust.

2. Finance: Fair Lending & Fraud Detection

Banks like JPMorgan Chase use XAI to explain credit decisions, which supports compliance with fair lending laws. Similarly, AI fraud detection systems must justify why a transaction was flagged, which helps prevent false accusations.

3. Criminal Justice: Reducing Algorithmic Bias

ProPublica’s 2016 investigation reported that COMPAS, a risk-assessment algorithm used to inform bail and sentencing decisions, was biased against Black defendants. XAI techniques have since been used to probe how such risk scores are produced, adding to calls for reform.
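
One common way to audit a risk score for this kind of bias is to compare false positive rates across groups: among people who did not reoffend, how many were still flagged as high risk? The sketch below uses made-up records and hypothetical group labels purely to show the calculation. It does not reproduce any real dataset or finding.

```python
from collections import defaultdict

# Made-up records for illustration: (group, predicted_high_risk, reoffended).
RECORDS = [
    ("A", True, False), ("A", True, True), ("A", False, False), ("A", True, False),
    ("B", False, False), ("B", True, True), ("B", False, False), ("B", True, False),
]


def false_positive_rates(records):
    """Per group: share of people who did not reoffend but were flagged high risk."""
    flagged = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            flagged[group] += predicted_high_risk
    return {g: flagged[g] / negatives[g] for g in negatives}


rates = false_positive_rates(RECORDS)
for group, rate in sorted(rates.items()):
    print(f"group {group}: false positive rate = {rate:.2f}")
```

A large gap between groups does not explain why the model behaves that way, but it signals where explanation techniques should be pointed.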

4. Autonomous Vehicles: Explaining Life-or-Death Decisions

If a self-driving car swerves to avoid a pedestrian, regulators and passengers need to know why. XAI helps engineers audit these decisions for safety and ethical compliance.

Challenges & Limitations of XAI

Despite its promise, XAI isn’t a magic bullet:

· Trade-Off Between Accuracy & Explainability: The most accurate AI models (e.g., deep learning) are often the least interpretable.

· Over-Simplification: Explanations might miss nuanced reasoning, leading to false confidence.

· "Explanation Washing": Some companies use XAI as a PR tool without addressing underlying biases.

Experts like Cynthia Rudin (Duke University) argue that we should prioritize interpretable models from the start rather than trying to explain black-box systems retroactively.

The Future of Explainable AI

As AI becomes more embedded in society, the demand for transparency will only grow. Key future trends include:

· Regulatory Push: Regulators are increasingly requiring explainability in sectors like healthcare and finance.

· Human-AI Collaboration: Tools that let users interrogate AI in real time.

· Self-Explaining AI: Next-gen models designed to be inherently interpretable without sacrificing performance.

Conclusion: Transparency Builds Trust

AI won’t reach its full potential unless people trust it. Explainable AI bridges the gap between cutting-edge technology and human understanding, ensuring that AI decisions are fair, accountable, and justifiable.

Whether you’re a doctor, a loan officer, or just someone curious about AI, XAI empowers you to ask: "How did you arrive at that decision?" and get a meaningful answer.

As we move forward, the goal isn’t just smarter AI; it’s AI we can understand, question, and ultimately trust.

Would you like a deeper dive into any specific XAI technique or industry application? Let me know in the comments!